“There have always been ghosts in the machine. Random segments of code, that have grouped together to form unexpected protocols. Unanticipated, these free radicals engender questions of free will, creativity, and even the nature of what we might call the soul.”

In the film I, Robot, loosely inspired by Isaac Asimov’s book of the same name, Dr. Lanning uses the phrase “ghosts in the machine” to explain why robots sometimes behave unexpectedly rather than as they were programmed.

These ghosts aren’t limited to sci-fi. In fact, we deal with them every day in software development. As technology grows more complex, software increasingly deviates from the behaviour we expect, just like Dr. Lanning’s robots.

We’re not yet at the point where autonomous vehicles could develop a will of their own, but computers, robots, vehicles, and other programmed machines do produce ghosts of unexpected behaviour. Understanding why these glitches happen is a vital step toward making them less frequent and handling them when they do show up.

Let’s explore some of the common reasons why software deviates from its intended behaviour.

Untested combinations of events

Testing a new vehicle is a very complex task. A single vehicle model may come in many variants depending on its feature set, destination country, and other parameters. The result is many different versions of the vehicle software for a single model year, each of which adds to the testing burden.

Even a rigorous testing process that covers specific combinations of events leaves gaps. It is difficult to predict every scenario a vehicle will face, because so many variables can affect the software at any given moment.
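To make the scale concrete, here is a small sketch that counts configurations for a hypothetical vehicle model. The parameter names and option values (`VARIANTS`, `CONDITIONS`, and their contents) are invented for illustration. The point is that the number of combinations is the product of the option counts, so every new parameter multiplies the test matrix rather than adding to it.

```python
from itertools import product
from math import prod

# Hypothetical variant parameters for one model year (illustrative only).
VARIANTS = {
    "market": ["EU", "US", "CN", "JP"],
    "powertrain": ["petrol", "hybrid", "electric"],
    "driver_assist": ["basic", "advanced"],
    "infotainment": ["standard", "premium"],
}

# Hypothetical on-road conditions the software may face (illustrative only).
CONDITIONS = {
    "weather": ["dry", "rain", "snow", "fog"],
    "road": ["urban", "highway", "gravel"],
    "passengers": [1, 2, 5],
}


def count_combinations(options: dict) -> int:
    """Number of distinct combinations: the product of the option counts."""
    return prod(len(values) for values in options.values())


def enumerate_combinations(options: dict):
    """Yield every combination as a dict, e.g. to drive a test matrix."""
    keys = list(options)
    for values in product(*(options[k] for k in keys)):
        yield dict(zip(keys, values))


if __name__ == "__main__":
    n_variants = count_combinations(VARIANTS)
    n_conditions = count_combinations(CONDITIONS)
    print(f"software variants: {n_variants}")
    print(f"conditions per variant: {n_conditions}")
    print(f"variant x condition pairs: {n_variants * n_conditions}")
```

With these made-up numbers, 48 software variants times 36 road conditions already gives 1,728 pairs, before the order and timing of events are even considered.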

Artificial intelligence is one potential solution, because it can generate combinations of events a human might not think of. Based on the expected interactions of the code, an AI system can create new scenarios, helping developers find edge cases they might miss during manual testing.

Testing doesn’t always reflect real life

Beyond untested combinations of events, a test suite may simply fail to cover a real-life situation. Tests often cover a narrow band of scenarios that can be predicted and controlled in the lab, but life on the road is unpredictable, especially once weather, road type, number of passengers, and similar factors come into play.

After production, continuously monitoring the software’s behaviour while the vehicle is on the road provides information about real-life situations. This gives testers a detailed view of the code as it actually runs, rather than a bird’s-eye view, and AI technology can support this monitoring while the car is in use.
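Field monitoring does not have to start with AI. As a minimal sketch, a statistical baseline can already flag behaviour that departs from the recent norm. The example below uses a plain rolling z-score rule, not machine learning, applied to a hypothetical metric such as a task’s execution time; the class name, window size, threshold, and warm-up count are all arbitrary assumptions.

```python
from collections import deque
from statistics import mean, pstdev


class RollingAnomalyDetector:
    """Flags samples far from the rolling mean of recent samples (z-score rule).

    Hypothetical helper: window, threshold and min_samples are illustrative.
    """

    def __init__(self, window: int = 50, threshold: float = 3.0, min_samples: int = 10):
        self.samples = deque(maxlen=window)  # oldest samples drop out automatically
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value: float) -> bool:
        """Return True if value is an outlier versus the samples seen so far."""
        is_anomaly = False
        if len(self.samples) >= self.min_samples:  # wait for a baseline first
            mu = mean(self.samples)
            sigma = pstdev(self.samples)
            # With zero spread the z-score is undefined, so nothing is flagged.
            if sigma > 0 and abs(value - mu) / sigma > self.threshold:
                is_anomaly = True
        self.samples.append(value)
        return is_anomaly
```

In a real vehicle, flagged samples would be reported along with their context, so developers can see the real-life situation that triggered them.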

Glitches are often incorrectly written software

What the world refers to as a computer glitch or a software bug is often an incorrectly written piece of software. This can cause unforeseen behaviour that could lead to a software update or recall.

The reasons for incorrect software vary. It is rarely a matter of bad developers; writing software for a vehicle is incredibly complex and involves many factors that can interact in unexpected ways. For example, a developer or architect may overlook a specific input or measurement that affects the behaviour of a particular function or module.

As vehicles become networks of computers (ECUs) on wheels, it is clearer than ever that real life throws up surprises that simply can’t be replicated manually in the lab. Mistakes will happen, so automakers need stronger testing and debugging capabilities. Data also plays a role: it’s crucial to collect as much information as possible throughout the software lifecycle, from development all the way to maintenance.

Incorrect data

When it receives incorrect data from the network, a car’s software might behave strangely. The software expects specific types of data so it can react accordingly; if the data is wrong, the car cannot respond as it was designed to. Incorrect data can come from faulty flash memory, a sensor malfunction, or a bit error in a network message.

Several mechanisms mitigate misinformation caused by bit errors, such as error-correcting codes, CRC data validation, and ECC integrity checks on flash memory. Range limits and other checks on the data values add a further layer of verification.
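A minimal sketch of two of these layers, a CRC check followed by a range check, applied to a hypothetical wheel-speed message. The frame layout (2-byte big-endian speed in tenths of a km/h plus one CRC byte), the 300 km/h limit, and the choice of the plain CRC-8 polynomial 0x07 are all assumptions for illustration; real networks define their own layouts and CRC parameters. ECC for flash memory is not covered here.

```python
CRC8_POLY = 0x07        # assumption: plain CRC-8, x^8 + x^2 + x + 1
RAW_PER_KMH = 10        # hypothetical encoding: 1 raw unit = 0.1 km/h
SPEED_MAX_KMH = 300.0   # hypothetical plausible upper bound


def crc8(data: bytes, poly: int = CRC8_POLY, init: int = 0x00) -> int:
    """Bitwise, MSB-first CRC-8 over data."""
    crc = init
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


def encode_speed(speed_kmh: float) -> bytes:
    """Build a 3-byte frame: 2-byte raw speed followed by its CRC."""
    payload = round(speed_kmh * RAW_PER_KMH).to_bytes(2, "big")
    return payload + bytes([crc8(payload)])


def decode_speed(frame: bytes) -> float:
    """Return speed in km/h, or raise ValueError if any check fails."""
    if len(frame) != 3:
        raise ValueError("unexpected frame length")
    payload, received_crc = frame[:2], frame[2]
    # Layer 1: integrity. Any single flipped bit changes the CRC.
    if crc8(payload) != received_crc:
        raise ValueError("CRC mismatch: frame corrupted")
    speed = int.from_bytes(payload, "big") / RAW_PER_KMH
    # Layer 2: range. An intact frame can still carry an impossible value.
    if not 0.0 <= speed <= SPEED_MAX_KMH:
        raise ValueError(f"speed {speed:.1f} km/h out of range")
    return speed
```

For example, `decode_speed(encode_speed(87.5))` returns 87.5, while a frame encoding 400 km/h passes the CRC but is rejected by the range check.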

Even with these mechanisms in place and “correct” data, an error may arise from an unfamiliar combination of information. The combination may seem so illogical to a human that it was never considered during testing. So even when the code is written correctly and has passed its tests, an error can still occur.
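Range checks cannot catch this class of error, because every value is individually valid. One common mitigation is a cross-signal plausibility check. The sketch below uses two invented rules and thresholds (`Gear`, `PARK_SPEED_TOLERANCE_KMH`, and the 50% throttle limit are hypothetical). It also illustrates the limitation described above: the check only catches combinations someone thought to write a rule for.

```python
from enum import Enum
from typing import List


class Gear(Enum):
    PARK = "P"
    REVERSE = "R"
    NEUTRAL = "N"
    DRIVE = "D"


# Hypothetical thresholds, for illustration only.
PARK_SPEED_TOLERANCE_KMH = 2.0
HEAVY_THROTTLE_PCT = 50.0


def check_plausibility(speed_kmh: float, gear: Gear,
                       brake_pressed: bool, accel_pct: float) -> List[str]:
    """Return descriptions of implausible signal combinations (empty if none)."""
    issues = []
    # Each signal may be in range, yet moving while in Park is implausible.
    if gear is Gear.PARK and speed_kmh > PARK_SPEED_TOLERANCE_KMH:
        issues.append("moving while in Park")
    # Brake and heavy throttle together: valid values, suspicious combination.
    if brake_pressed and accel_pct > HEAVY_THROTTLE_PCT:
        issues.append("brake and heavy throttle at the same time")
    return issues
```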

Data reception issues

Problems with data reception can introduce lag into a system and, in turn, operational delays. This can be fatal if a safety system doesn’t engage in time: brakes might not respond when they should, or airbags might not deploy quickly enough to protect the occupants.

Latency and lag in the propagation of data over the network are issues that are hard to overcome and may pose a grave danger to the vehicle, passengers, and bystanders.
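Latency cannot be eliminated, but a receiver can at least detect it. A common pattern is deadline (timeout) monitoring: track when each periodic message last arrived and flag it once it is overdue. The sketch below assumes hypothetical message names and deadlines and takes the current time as a parameter so it can be tested; what the system does on a timeout, such as falling back to a safe state, is out of scope.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class DeadlineMonitor:
    """Flags periodic messages that arrive late or stop arriving."""
    deadlines_ms: Dict[str, float]  # hypothetical per-message deadline
    last_seen_ms: Dict[str, float] = field(default_factory=dict)

    def on_receive(self, msg_id: str, now_ms: float) -> Optional[float]:
        """Record an arrival; return the gap if it exceeded the deadline."""
        previous = self.last_seen_ms.get(msg_id)
        self.last_seen_ms[msg_id] = now_ms
        if previous is not None and now_ms - previous > self.deadlines_ms[msg_id]:
            return now_ms - previous
        return None

    def timed_out(self, now_ms: float) -> List[str]:
        """Messages whose last arrival is older than their deadline (or never seen)."""
        return sorted(
            msg_id
            for msg_id, deadline in self.deadlines_ms.items()
            if now_ms - self.last_seen_ms.get(msg_id, float("-inf")) > deadline
        )
```

For example, with a 30 ms deadline on a hypothetical `brake_pedal` message last seen at 10 ms, `timed_out(45.0)` reports it as overdue, and a late arrival at 50 ms makes `on_receive` return the 40 ms gap.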

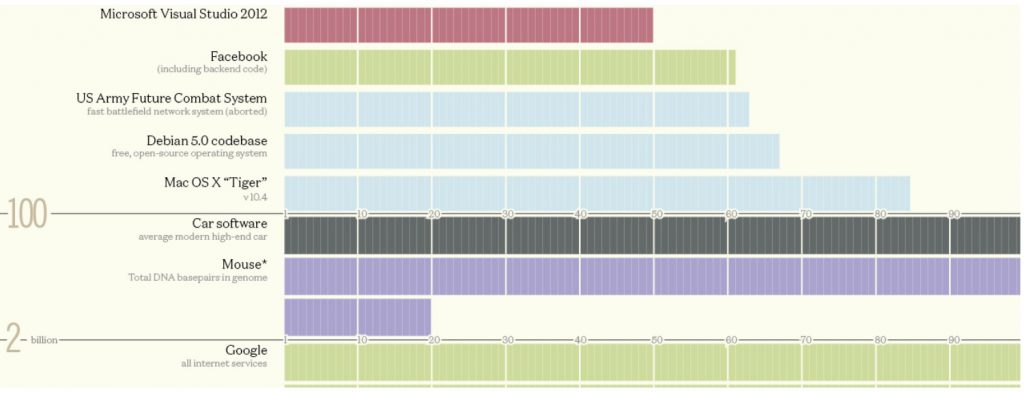

Million Lines of Code — Information is Beautiful

Modern vehicles are software on wheels, and they’re getting more complex all the time. Today’s vehicles already run around 100 million lines of code (LOC), and a Level-5 autonomous vehicle is forecast to need around 600 million LOC. The more code there is, the more chances ghosts in the machine have to appear. Understanding how these glitches occur, and what vehicles are commonly recalled for, can help us solve these problems before they get out of hand.

Using artificial intelligence to support the development process is key for automotive software. New ways to debug and to see clearly what’s happening in real time are becoming imperative, not only to build better, safer vehicles but also to shorten the time between a software bug occurring and a solution being found. Combining this with the ability to deploy that solution quickly to cars on the road is even better.

Not every glitch presents a safety concern; many manifest as small issues that frustrate drivers. They should still be treated seriously, and reducing their effect on the end user is vital for manufacturers. When a glitch does appear, an over-the-air (OTA) update is often enough to fix it. It’s also important that automakers can quickly roll back the software to a previous version if a glitch appears after an update.
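One common way to make rollback fast is an A/B (dual-bank) layout: the update is written to the inactive slot, so the previous version stays intact and switching back is immediate. The model below is an illustrative sketch, not Aurora Labs’ approach or any specific OTA framework; the `ABSlots` class, slot names, version strings, and the `health_check` callback are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class ABSlots:
    """Hypothetical dual-bank (A/B) software store for one ECU."""
    versions: Dict[str, str]  # slot name -> installed version
    active: str = "A"

    @property
    def inactive(self) -> str:
        return "B" if self.active == "A" else "A"

    def apply_update(self, new_version: str,
                     health_check: Callable[[str], bool]) -> str:
        """Install into the inactive slot, switch to it, and roll back if the
        health check fails. Returns the version that ends up active."""
        previous = self.active
        target = self.inactive
        self.versions[target] = new_version  # the active slot is never overwritten
        self.active = target
        if not health_check(new_version):
            self.active = previous  # rollback is just switching slots back
        return self.versions[self.active]
```

The design choice is that rollback never needs a download: the last known-good version is still sitting in the other slot.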

If you’d like to learn about how Aurora Labs can help you identify potential issues and improve the testing process during development, book a demo here.
